Effortless Character Control and Real-Time Diverse Movement

Real-time character control is the holy grail in computer animation, playing a pivotal role in creating immersive and interactive experiences. Despite significant advances in this area, real-time character control continues to present several key challenges. These include generating visually realistic motions of high quality and diversity, ensuring controllability of the generation, and attaining a delicate balance between computational efficiency and visual realism.

In gaming, for example, non-player characters (NPCs) have been limited to monotonous movements because they rely on a small set of pre-programmed animations. These characters often repeat the same motions, lacking individuality and realism.

To bring more dynamic characters with realistic motions to the virtual world, a global team of computer scientists has created a novel computational framework that overcomes these key obstacles. The team, comprising researchers from the Hong Kong University of Science and Technology, the University of Hong Kong, and Tencent AI Lab-China, will present a new character control framework that uses motion diffusion probabilistic models to generate high-quality, diverse character animations that respond in real time to a variety of dynamic user-supplied control signals.

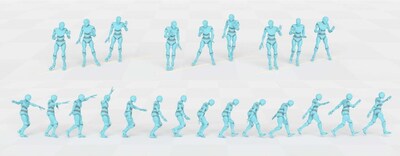

At the core of their method, say the researchers, is a transformer-based Conditional Autoregressive Motion Diffusion Model (CAMDM), which takes as input the character's historical motion and can generate a range of diverse potential future motions, in real time. Their method can generate seamless transitions between different styles, even in cases where the transition data is absent from the dataset.
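The autoregressive loop described above can be illustrated with a toy sketch. This is not the researchers' CAMDM implementation: the "denoiser" below is a hand-written placeholder standing in for the trained transformer, the refinement rule is a simplified stand-in for a real diffusion sampler, poses are reduced to a single number per frame, and every name and constant (`HISTORY_LEN`, `FUTURE_LEN`, `DENOISE_STEPS`, `toy_denoiser`, `sample_future`, `rollout`) is hypothetical. It only shows the control flow: sample future frames from noise, conditioned on recent history and a user control signal, append them, and repeat.

```python
import random

# All constants are illustrative assumptions, not values from the paper.
HISTORY_LEN = 4    # past frames the model conditions on
FUTURE_LEN = 3     # frames generated per autoregressive step
DENOISE_STEPS = 8  # refinement iterations per sample


def toy_denoiser(noisy, history, control, t):
    """Placeholder for a learned network (t is unused in this toy).

    Pulls the noisy sample halfway toward a simple extrapolation of the
    last history frame along the user-supplied control value.
    """
    last = history[-1]
    target = [last + control * (i + 1) for i in range(len(noisy))]
    return [0.5 * n + 0.5 * tg for n, tg in zip(noisy, target)]


def sample_future(history, control, rng):
    """Start from Gaussian noise and refine it iteratively."""
    x = [rng.gauss(0.0, 1.0) for _ in range(FUTURE_LEN)]
    for t in range(DENOISE_STEPS, 0, -1):
        estimate = toy_denoiser(x, history, control, t)
        # Inject a shrinking amount of noise; none on the final step.
        scale = 0.1 * (t - 1) / DENOISE_STEPS
        x = [e + scale * rng.gauss(0.0, 1.0) for e in estimate]
    return x


def rollout(initial_history, controls, seed=0):
    """Autoregressive generation: each step conditions on the newest frames."""
    rng = random.Random(seed)
    motion = list(initial_history)
    for control in controls:
        history = motion[-HISTORY_LEN:]
        motion.extend(sample_future(history, control, rng))
    return motion


if __name__ == "__main__":
    start = [0.0, 0.0, 0.0, 0.0]
    controls = [1.0, 1.0, -1.0]  # e.g. forward, forward, backward
    print(rollout(start, controls, seed=1))
    print(rollout(start, controls, seed=2))  # same controls, different motion
```

Because each sample starts from fresh noise, two rollouts with the same control signals but different seeds produce different motions, which loosely mirrors the diversity the researchers describe.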

"With this new technique, we're on the verge of witnessing a more realistic digital world. This world will be populated with thousands of digital humans empowered by our technique, each exhibiting unique features and diverse movements," Xuelin Chen, project lead of the research and senior researcher at Tencent AI Lab, says. "In this immersive digital world, every digital human is an individual with distinct characteristics, and we can control our own unique virtual characters to navigate and interact with them."

The team is currently working with industry to incorporate their technique into a video game product, with an expected launch date in late 2024. In future work, they intend to extend their method to a few key areas, including animation and film, and to enhance virtual reality user experiences. At SIGGRAPH this year, Chen will be joined by co-authors and collaborators Rui Chen and Ping Tan from the Hong Kong University of Science and Technology; Mingyi Shi and Taku Komura at the University of Hong Kong; and Shaoli Huang, who is also with Tencent AI Lab. For the full paper and video, visit the team's page.

This research is a small preview of the vast Technical Papers research to be shared at SIGGRAPH 2024. Visit the Technical Papers listing on the full program to discover more generative AI content and beyond.

About ACM, ACM SIGGRAPH, and SIGGRAPH 2024

ACM, the Association for Computing Machinery, is the world's largest educational and scientific computing society, uniting educators, researchers, and professionals to inspire dialogue, share resources, and address the field's challenges.

ACM SIGGRAPH is a special interest group within ACM that serves as an interdisciplinary community for members in research, technology, and applications in computer graphics and interactive techniques. The SIGGRAPH conference is the world's leading annual interdisciplinary educational experience showcasing the latest in computer graphics and interactive techniques.

SIGGRAPH 2024, the 51st annual conference hosted by ACM SIGGRAPH, will take place live 28 July–1 August at the Colorado Convention Center, along with a virtual access option.

View original content to download multimedia:

https://www.prnewswire.com/news-releases/creativity-and-innovation-at-the-intersection-of-ai-computer-graphics-and-design-302185164.html

SOURCE SIGGRAPH

Contact: Michelle Ellis, 720.432.8130, michelle@ellis-comms.com

Company Name: SIGGRAPH